Introduction

In recent years, Personalised Learning has garnered considerable attention and funding from prominent institutions. The US Department of Education spearheaded a ‘Race to the Top’ initiative in which it “awarded $510 million to 21 grantees” to encourage innovation in 21st-century, data-driven, personalised learning approaches (Sykes et al, 2014; Bulger, 2016). Philanthropic organisations such as the Gates Foundation and Mark Zuckerberg and Priscilla Chan’s ventures have also taken a similar path. The former invested $5 billion in learning initiatives, with ~$175 million targeted at Personalised Learning specifically (Bill & Melinda Gates Foundation, 2010; Bill & Melinda Gates Foundation, 2015; Bulger, 2016). The latter established the Chan Zuckerberg Education initiative and announced that a portion of their pledged $45 billion would go towards Personalised Learning (Zuckerberg & Chan, 2015; Bulger, 2016). This surge in interest can be attributed to technological leaps driven by Big Data, increased computing power and the ‘successful’ implementation of algorithms.

In the abstract, personalised learning refers to a learning system that is adapted to an individual student’s needs, abilities and interests. Its roots can be traced back to figures such as Dewey (1988 [1927], 2001 [1916]), who highlighted the need for a ‘learner-centric’ model of education, and Bloom (1984), who observed that students who received one-on-one tuition outperformed other students by ‘two sigmas’, a phenomenon he attributed to individualisation. What is commonly called ‘personalised learning’ in the 21st century refers to a very specific, technologically driven instantiation of this theoretical framework, which I henceforth refer to as PL™. The definition of PL™ is ambiguous, with various figures using it to refer to different types of systems; Bulger (2016) outlines how these systems range from those featuring only surface-level customisation (e.g. colour choices) to fully-fledged ‘intelligent tutors’. This essay adopts the widespread modern definition of PL™ supplied by the US Department of Education. In its 2012 report on educational data mining, the Department describes PL™ as a process that involves the following:

- A student completes a set of tasks and generates a stream of data while doing so

- This data is sent to back-end software

- Analytics is performed on the data using performance, behavioural and ‘preference-related’ metrics

- An algorithm produces a set of new tasks and resources for the student based on the results of this analysis. Key weaknesses in academic performance are also communicated back to the student.
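The four steps above amount to a simple feedback loop. The Python sketch below illustrates one pass of it under loudly stated assumptions: the `Attempt` record, the per-topic accuracy metric, the 0.7 threshold and the task bank are all hypothetical, and the systems the report describes draw on far richer performance, behavioural and preference data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Attempt:
    """One task attempt in the student's data stream (hypothetical schema)."""
    topic: str
    correct: bool


def analyse(attempts):
    """Analytics step: per-topic accuracy as a stand-in 'performance' metric."""
    totals = defaultdict(lambda: [0, 0])  # topic -> [correct, attempted]
    for attempt in attempts:
        totals[attempt.topic][0] += int(attempt.correct)
        totals[attempt.topic][1] += 1
    return {topic: right / tried for topic, (right, tried) in totals.items()}


def recommend(accuracy, task_bank, threshold=0.7, per_topic=2):
    """Recommendation step: weakest topics first, up to `per_topic` new tasks each."""
    weak = sorted((t for t, acc in accuracy.items() if acc < threshold),
                  key=lambda t: accuracy[t])
    plan = [task for t in weak for task in task_bank.get(t, [])[:per_topic]]
    return weak, plan  # `weak` is what gets reported back to the student


stream = [Attempt("fractions", True), Attempt("fractions", False),
          Attempt("algebra", True), Attempt("algebra", True),
          Attempt("geometry", False)]
bank = {"fractions": ["f1", "f2", "f3"], "geometry": ["g1"], "algebra": ["a1"]}
weak, plan = recommend(analyse(stream), bank)
print(weak)  # ['geometry', 'fractions']
print(plan)  # ['g1', 'f1', 'f2']
```

In this sketch everything the student sees next is decided by the analytics step; the student only supplies data.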

The emphasis is placed on optimising the effectiveness and efficiency of learning outcomes via personalisation. The report also holds up Amazon and Netflix as models of what PL™ should strive to look like, complete with predictive algorithms, individual “learner profiles” and learning maps (Bienkowski, Feng, and Means, 2012). In this essay, I explore the positive and negative effects PL™ has on K-12 learning outcomes, using the existing ‘mass education’ system as a benchmark where necessary. I argue that although PL™ has its advantages, our vision of ‘The Future of Education’ should not be based on its current conception. The final part of this essay suggests alternative ways in which data and new technologies can be used to enhance learning in conjunction with strategies from other learning paradigms. Other important issues, such as data privacy and corporate incentives, will not be discussed, as the discussion centres on the question of learning outcomes.

What positive and negative effects does PL™ have on K-12 learning outcomes?

A strength of PL™ widely touted by its proponents is the gain in effectiveness and efficiency it provides for competence-based skill learning. Such gains are said to derive from catering to individual needs and abilities through cutting-edge, Big-Data-driven techniques. An example of a PL™ technology is Carnegie Learning’s Cognitive Tutor® Algebra I (henceforth CAT), software that provides students with individualised content and problem sets on various mathematical topics based on their respective abilities and weaknesses. Pane et al (2014) examined the effectiveness of CAT by randomly assigning the schools within matched pairs of similar schools either to continue with the existing standardised mathematics course or to adopt CAT. A comparison of test results between these groups of schools shows that CAT had a positive effect on mathematics learning in the second year of use, “improving the median student’s performance by approximately eight percentage points” (Pane et al, 2014). CAT is arguably more efficient as well as more effective: students receive personalised feedback from algorithms much faster than teachers, who are constrained by time and resources, can provide it (Shechtman et al, 2013). A further example of the learning gains observed from PL™ is a comprehensive RAND study of 11,000 students at 62 schools. The researchers observed greater improvements in mathematics and reading among PL™ students than among ‘traditional school’ counterparts with similar starting performance. They also note that longer use of PL™ is associated with greater improvement in performance (Pane et al, 2015).

Another purported benefit of PL™ is that students across the board can see improvements in academic performance, as the pace of learning is adjusted to each student’s abilities. This contrasts with the current education system, which primarily benefits students of ‘median ability’ and neglects those at the extremes of the academic performance spectrum (Colangelo, Assouline, & Gross, 2004). Indeed, when comparing PL™ students with control groups at corresponding ‘starting achievement’ levels, Pane et al (2015) observe that the majority of PL™ students at every ability level (from the mid-50s to 60 per cent) end up surpassing their control-group peers in mathematics and reading. Being digital, PL™ is also cheap and flexible: it is not inherently limited by location, time or costly resources such as good teachers, and it is much cheaper to revise and update (Cator & Adams, 2013). This suggests wider accessibility and more consistent teaching quality across typical social divides such as socio-economic status. Thus, the ‘personalisation’ capacity of PL™ seems to facilitate more efficient and effective learning for K-12 students of different abilities and demographics.

However, existing research on the increased effectiveness and efficiency of PL™ has been inconclusive, and many studies in the field suffer from methodological limitations and a lack of rigour. For example, Herold (2016) identified confounding factors that could explain the improvements in academic performance found by Pane et al (2015). Firstly, the schools in that study did not use PL™ exclusively; they also employed many techniques and systems found in traditional schools, “such as grouping students based on performance data”. Secondly, the schools were not randomly selected to participate in the PL™ pilot scheme. The participating schools were “charters that received competitive grants”, and hence most likely had better educational resources and infrastructure than the average school (Herold, 2016). A 2017 replication of the study by RAND also yielded mixed results: improvements in student performance were smaller than those found in 2015 and were statistically significant only in mathematics (Pane et al, 2017). Studies of CAT have likewise yielded inconclusive and mixed results. When the US Department of Education evaluated numerous CAT studies against What Works Clearinghouse evidence standards, only one of the 14 studies examined was found to meet the appropriate standards of rigour (What Works Clearinghouse, 2009). Additionally, the CAT study by Pane et al (2014) discussed above notes that the positive effects of CAT were statistically significant only for high school students. Moreover, the CAT schools adopted a form of blended learning in which CAT was used only two days a week under teacher supervision, with the traditional curriculum followed on the other days. Existing studies that espouse the effectiveness and efficiency of PL™ should therefore be taken with a grain of salt.

PL™ places great emphasis on ‘measurement’ and on what is ‘quantifiable’. It is important here to distinguish between two key types of measurement employed by PL™: 1) those that measure the effectiveness of learning tools and systems and 2) those that measure student learning outcomes. The former gives PL™ a considerable advantage, as it can use the data it collects and generates, such as how long students stay engaged, to iterate on and improve existing systems by tweaking variables like the number of breaks. This parallels how platforms such as YouTube experiment with metrics such as click-through rate to discover which videos yield the highest user engagement (Covington et al, 2016). Furthermore, data on tool effectiveness can also be used for research on education and learning techniques more generally, strengthening theory with empirical evidence and ultimately paving the way for novel and transformative findings (Dishon, 2017). However, measuring system effectiveness is partly contingent on measuring student outcomes, since the latter is used to gauge the efficacy of the former. This brings us to the second type of measurement encountered in PL™. Although there are multiple reasons for measuring student learning outcomes, the main one typically put forth is testing for true understanding. However, given the lack of metrics that are good proxies for understanding, an excessive focus on quantifiable measures could shift attention away from measuring ‘true understanding’ of the content. Not only does this give a distorted picture of the student’s understanding (leading to erroneous analysis and poorly selected recommended resources), it also incentivises students to optimise for the metrics themselves rather than for true understanding, à la Goodhart’s law: when a measure becomes a target, it ceases to be a good measure. This phenomenon is already visible in the existing use of grades.
As Schinske and Tanner (2014) outline, grades were adopted to increase the productivity and efficiency of institutions rather than to improve student learning outcomes. They highlight how grades are not only an unreliable metric of student learning but also encourage students to prioritise avoiding bad grades over attaining true understanding, ultimately incentivising the avoidance of challenging problems and reducing the joy of learning (Butler and Nisan, 1986; Butler, 1988; Crooks, 1988; Pulfrey et al., 2011). PL™ could exacerbate this effect because of its even greater emphasis on measuring poor proxies for understanding. For instance, as on YouTube and Netflix, metrics such as time spent engaged, clicks and views are used to measure student engagement and, to some extent, academic performance. This is problematic, as studies such as Macfadyen and Dawson (2010) observed that some learning analytics metrics, such as time spent engaging with resources, show no correlation with academic performance. Nevertheless, PL™’s emphasis on quantifying student learning outcomes is useful for other ‘secondary’ purposes, such as providing an easy basis of comparison for teachers, employers and higher-education institutions.
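To make the proxy problem concrete, here is a minimal Python sketch of how one might check whether an engagement metric tracks performance at all before treating it as a stand-in for understanding. The function names, the 0.3 cut-off and the toy numbers are hypothetical. Pearson’s r captures only linear association, so a near-zero value, as in the usage below, means the proxy carries little linear information about scores.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


def proxy_is_informative(proxy, outcome, min_abs_r=0.3):
    """Hypothetical rule of thumb: use a metric as a proxy only if |r| >= min_abs_r."""
    return abs(pearson_r(proxy, outcome)) >= min_abs_r


# Toy data (invented for illustration): minutes on the platform vs. test score.
minutes = [10, 20, 30, 40]
scores = [55, 75, 75, 55]
print(pearson_r(minutes, scores))             # 0.0
print(proxy_is_informative(minutes, scores))  # False
```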

Additionally, this myopia encourages both educational institutions and students to focus on improving skills that are easily quantifiable and measurable rather than working out what is important from first principles. This is evident in the studies examined above, which investigate PL™’s effects only on mathematics and reading. However, the scope of K-12 learning extends beyond these two subjects; in fact, it is more than the sum of all classroom subjects. PL™ appears to do little for meta-skills and for dimensions outside what we classically conceive of as academic. Examples of meta-skills neglected by PL™ include learning how to learn and learning how to deal with necessary confusion, both crucial to becoming a self-directed learner and critical thinker. Multiple studies in the learning sciences have highlighted the importance of self-regulation and self-determination for academic success and effective lifelong learning (Bulger, 2016; Deci & Ryan, 2000; Ames & Archer, 1988). Self-regulation refers to the capacity to manage one’s own learning effectively. It is closely tied to ‘metacognition’, or thinking about thinking (Goldman et al, 2015), and involves monitoring one’s own progress, setting goals and devising strategies for achieving them. Self-determination refers to the cultivation of healthy and effective behaviour. By having an algorithm churn out a student’s next steps in an almost deterministic, black-box fashion, PL™ trains students to rely on the learning system. This ‘robbing’ of autonomy stunts the development of critical skills involving metacognition, self-determination, decision-making and the taking on of responsibility. In light of such findings, Goldman et al (2015) argue that an educational system should “promote agency and self-regulated learning”.
They attribute this to the observed positive relationship between “agency” and academic achievement in students (Dweck & Master, 2009) and conjecture that this could be due to the greater resilience demonstrated by students when they view themselves as being in charge of their own learning (Butler & Winne, 1995).

Socialisation is another important dimension of learning that is not easily measurable and is hence neglected by PL™. The education theorist Dewey highlights the importance of social participation in learning and in shaping character, championing activities that encourage interaction with others in the environment over abstract rumination (Dishon, 2017; Schutz, 2001). PL™’s heavy emphasis on the individual, combined with its screen-based nature, leads to the atomization of the individual. This neglect of the relational is problematic, as socialisation is key to the construction of meaning and knowledge, as social constructivists contend (Vygotsky, 1978). Even an activity such as reading, which we perceive as solitary, involves a ‘diluted’ form of socialisation via cultural transmission. This boils down to fundamentally different beliefs about how learning primarily occurs: PL™ favours the passive reception of knowledge, whereas social constructivists believe in the (co-)construction of knowledge. This excessive focus on Personalisation™ at the expense of socialisation also raises concerns about the fragmentation of a shared social reality. PL™ encourages personalisation not only in pace but also in content, which exposes different students to different information. As McRae (2013) points out, to prevent ‘digital echo-chambers’ from forming, it is crucial to incorporate ideas relating to “critical thinking, diversity and … serendipity” in learning. Socialisation can also have positive effects on individual learning and academic performance. Multiple studies have shown that various forms of collaborative learning, such as classroom discussion, help develop critical thinking and reasoning abilities by allowing individual students to test their ideas and synthesise them with those of others (Reznitskaya et al, 2007; Weber et al, 2008; Corden, 2001).
Socialisation aside, other difficult-to-quantify ‘meta-skills’ that PL™ neglects, as outlined by Roberts-Mahoney et al (2016), include “innovation (Blikstein, 2011), creativity, critical thinking, problem solving (Atkins et al. 2010), collaboration, and leadership (Cator & Adams, 2013)”.

Should our current conception of PL™ be held up as the ‘Future of Education’?

Although PL™ has many weaknesses, as highlighted above, these should not automatically disqualify it as a potential vision of the ‘Future of Education’. PL™ is still in its early days, with details of the framework being iteratively updated based on research findings. However, I argue that we should not hold it up as the ‘Future of Education’, in the sense of a transformed, truly “modern” and post-industrial education, because it is merely an extension of the current system with added bells and whistles.

Firstly, the means by which students learn are fundamentally the same as in the current system: ‘knowledge-telling’. Knowledge-telling refers to passive engagement with learning materials without deep reflection or analysis; examples include rote memorization, shallow reading and passive listening. Many studies have shown that this approach is effective only for retaining facts and other low-level procedural knowledge, neglecting flexible skills, attitudes and complex knowledge (Lynch, 2018; Goldman & Pellegrino, 2015; Lim, Reiser, and Olina, 2009; Marzano et al., 2001). Indeed, as previously outlined, most PL™ technologies focus on imparting the former rather than the latter. PL™ in its current form merely provides a more efficient and effective system for dumping information rather than fixing the fundamentally flawed design of that system. Other learning paradigms should be considered and explored, including inquiry learning, discovery learning and problem-based learning (Lynch, 2018).

Secondly, despite what its name suggests, PL™ is not truly personalised, as it comes from a ‘standardized’ and centralised system of algorithms. The output of PL™ learning systems is limited by the selected algorithm(s) and by the data fed into them. If PL™ follows in the footsteps of Amazon and Netflix, clear limits on personalisation are built in. These firms focus their analysis on aggregate data, using techniques such as user grouping, comparison and profiling (Plummer, 2017). Although such techniques are useful for recommendation systems, they are inherently not ‘personalised’. This may not matter in the realm of entertainment, but in K-12 learning, which is crucial for shaping character, we risk moulding students into a limited number of standardised archetypes. This issue is a symptom of Personalisation™, a product of our consumerist capitalist society in which people ‘individualise’ themselves artificially and superficially. Watters (2018) argues that this need for Personalisation™ arises partly from our current system of standardised education, with Personalisation™ acting as a “psychological balm” rather than a solution that satisfies our desire for a unique individual identity. PL™ also claims to provide a ‘learner-centric’ approach. However, a large part of what constitutes ‘learner-centric’ education, as argued by Dewey, derives from student autonomy. Dewey is a strong proponent of children being actively involved in shaping their learning, which he deems central to the formation of the “democratic citizen” (Dewey, 2010 [1899]; Dishon, 2017). This contrasts starkly with the reality of PL™, which relies on a centralised algorithm for decision-making and is packaged as an end-product rather than treated as an iterative process. As David Hargreaves (2006) suggests, “personalising” learning may be a more fitting name than “personalised learning”.
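The point about grouping and profiling can be illustrated with a minimal nearest-centroid sketch in Python. It assumes, purely hypothetically, that a recommender has already summarised students into four fixed archetypes described by two features (pace and accuracy, scaled 0-1), each tied to one content plan. Real systems such as Amazon’s and Netflix’s are far more elaborate, so this shows only the general shape of the limitation: however varied the students, each receives one of four plans.

```python
import math

# Hypothetical archetypes learned from aggregate data: (pace, accuracy) on a 0-1 scale.
ARCHETYPES = {
    "fast-accurate": (0.9, 0.9),
    "slow-accurate": (0.3, 0.85),
    "fast-careless": (0.85, 0.4),
    "struggling": (0.3, 0.35),
}

# Hypothetical content plan attached to each archetype.
PLAYBOOK = {
    "fast-accurate": "enrichment set",
    "slow-accurate": "timed practice",
    "fast-careless": "error-checking drills",
    "struggling": "remedial review",
}


def assign_archetype(profile):
    """Return the archetype whose centroid is nearest (Euclidean) to the student's profile."""
    return min(ARCHETYPES, key=lambda name: math.dist(profile, ARCHETYPES[name]))


def recommend_for(profile):
    """Every student mapped to the same archetype receives the same plan."""
    return PLAYBOOK[assign_archetype(profile)]


students = {"Ada": (0.95, 0.97), "Ben": (0.78, 0.82), "Cy": (0.35, 0.8)}
for name, profile in students.items():
    print(name, assign_archetype(profile), recommend_for(profile))
```

Ada and Ben have noticeably different profiles, yet both are mapped to the same archetype and receive the same plan.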

Finally, PL™ places an excessive focus on improvements driven by material technology, while neglecting possible improvements deriving from shifts in theoretical learning frameworks, value systems and experimental evidence from the field of education. It shifts the burden of improving learning outcomes from system design and content creation onto the system’s algorithms (Lynch, 2018). Rather than confronting the daunting question of what the purpose of education is, PL™ merely beefs up the current system with cutting-edge technology and presents itself as the ‘Future of Education’. Although one can argue that improvements to systems can be iterative rather than revolutionary, this does not justify EdTech’s entrenchment in existing ideals and its limited exploration of other possibilities. I hence call for a return to first principles and a deep reflection on what the purpose of education should be. Given that scholars have been ruminating over this question for millennia (to little avail), I concede that a ‘model’ answer is unlikely to emerge any time soon. However, technological innovations should be guided by new creative attempts at answering it, which involves taking risks, proposing moonshot ideas outside the current system or creatively integrating multiple existing ideas, experimenting, iterating, and so on.

Alternative roles for data and technology in K-12 learning: Where do we go from here?

Despite the current insufficiency of PL™, a complete denunciation of the technological tools and systems it offers would hinder progress. Instead, I call for a reconceptualisation of how data and technology can be integrated into the K-12 education system.

Within the realm of personalised learning, data and algorithms can be used to conceptualize new personalised learning tools. Rather than replacing the full education pipeline, such tools can provide large gains in particular subject-specific and general learning skills. An existing tool that serves this function is Anki, a flashcard program that uses spaced repetition to improve retention. Its algorithm schedules specific flashcards to be shown on designated days based on the user’s indication of how well they recalled each card’s contents; this design is informed by Ebbinghaus’ forgetting curve (Ebbinghaus, 1964). Spaced repetition has been shown to be very effective for memorization tasks such as learning foreign vocabulary and biological facts; a meta-analytic review comparing massed with spaced practice found an average effect size of 0.46 in favour of spacing on learning tasks (Donovan & Radosevich, 1999). It is therefore possible to envision outsourcing retention tasks to Anki, while leaving educators and/or alternative systems to oversee other domains of learning such as problem solving and collaborative discussion.
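To show what scheduling cards based on how well the user recalls them means in practice, here is a simplified Python sketch of SM-2 (SuperMemo 2), the published algorithm from which Anki’s original scheduler was derived. It is not Anki’s actual code: Anki modifies SM-2 (for example, it uses four answer buttons rather than a 0-5 grade), and recent versions also offer a different scheduler. The constants below (first intervals of 1 and 6 days, starting ease 2.5, floor 1.3) are SM-2’s.

```python
from dataclasses import dataclass


@dataclass
class Card:
    repetitions: int = 0    # consecutive successful recalls
    interval_days: int = 0  # days until the card is shown again
    ease: float = 2.5       # SM-2 "easiness factor": how fast intervals grow


def review(card: Card, quality: int) -> Card:
    """Return the card's new schedule after a review graded 0 (blackout) to 5 (perfect)."""
    if not 0 <= quality <= 5:
        raise ValueError("quality must be between 0 and 5")
    if quality >= 3:  # recalled: space the next review further out
        if card.repetitions == 0:
            interval = 1
        elif card.repetitions == 1:
            interval = 6
        else:
            interval = round(card.interval_days * card.ease)
        repetitions = card.repetitions + 1
    else:  # forgotten: restart the sequence with a short interval
        interval, repetitions = 1, 0
    # Easy recalls raise the ease factor; hard or failed ones lower it (never below 1.3).
    ease = card.ease + (0.1 - (5 - quality) * (0.08 + (5 - quality) * 0.02))
    return Card(repetitions, interval, max(1.3, ease))


card = Card()
for grade in [5, 5, 5, 2]:
    card = review(card, grade)
    print(grade, card.interval_days)  # intervals: 1, 6, 16, then back to 1
```

The growing gaps (1, 6, then 16 days) are the spacing that the forgetting-curve rationale calls for; a failed recall resets the sequence.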

A hybrid model of PL™ and teachers can also be used. As Dishon (2017) proposes, keeping humans in the loop allows for more autonomy. He describes how students can get a clearer, better-informed picture of their own strengths and weaknesses by looking at their ‘learning data’. Based on this information and on deliberations with parents and teachers, students can then decide which aspects of learning to focus on. Used in this way, the tools enabled by PL™ can empower student decision-making and self-regulation by giving students more complete information to act on. This conception of PL™ hence serves as a data interface rather than a recommender system, although the latter can also be integrated into the platform. For instance, to preserve autonomy, an algorithm can suggest several paths while allowing humans to select the one they want. Because recommendations are made from existing data and content, it remains unclear whether relying on such a system would place built-in limits on how personalised learning could be. Students and teachers should thus also be encouraged to propose paths outside these choices.
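The ‘suggest, then let humans choose’ arrangement can be sketched in Python as follows. This is a hypothetical illustration: the candidate paths and their predicted-benefit scores are invented, and in practice the scores would come from whatever model the platform uses. The key design choice is that the algorithm only ranks options; the final decision, including a path the algorithm never proposed, stays with the student, teachers and parents.

```python
def suggest_paths(predicted_benefit, k=3):
    """Rank candidate paths by a (hypothetical) predicted-benefit score; return the top k."""
    return sorted(predicted_benefit, key=predicted_benefit.get, reverse=True)[:k]


def choose_path(suggestions, chosen):
    """Accept the humans' choice and record whether it came from the algorithm or from them."""
    origin = "suggested" if chosen in suggestions else "self-proposed"
    return {"path": chosen, "origin": origin}


scores = {"more fractions practice": 0.62, "geometry project": 0.55,
          "peer tutoring": 0.48, "review algebra": 0.71}
options = suggest_paths(scores)
print(options)  # ['review algebra', 'more fractions practice', 'geometry project']
print(choose_path(options, "design a board game about ratios"))
```

Recording the origin of each choice would, for instance, let teachers see how often students step outside the suggested paths.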

A hybrid human-in-the-loop model is also an exciting prospect because PL™’s infrastructure greatly facilitates experimentation. In our existing mass education model, teachers are deterred from experimenting with elements such as teaching style and content presentation by time and resource constraints, rigid curricula, and indirect and lengthy feedback loops (Wiese, 2020). The technological infrastructure and data-driven nature of PL™ remove many such constraints and thus, counterintuitively, place the reins of control more firmly in the teacher’s grasp. Teachers could potentially tweak specific variables in the system, such as the frequency of pause-and-reviews, and observe the effects on student learning. The instantly generated data provides much quicker feedback loops, making this sort of experimentation more feasible. Experimentation need not be limited to teachers; students could also play a role in tweaking variables and finding the optimal settings for their learning.
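As a rough illustration of the kind of quick experiment described above, the Python sketch below randomly splits a class into two groups (say, frequent versus default pause-and-reviews) and summarises the outcome as Cohen’s d, a standardised mean difference. The function names, the seed and the quiz scores are hypothetical; with samples this small the estimate is very unstable, so a teacher would want repeated runs before drawing conclusions.

```python
import math
import random
from statistics import mean, stdev


def assign_groups(student_ids, seed=0):
    """Randomly split students into two groups of (nearly) equal size."""
    ids = list(student_ids)
    random.Random(seed).shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


def cohens_d(treatment, control):
    """Standardised mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    pooled_var = ((n1 - 1) * stdev(treatment) ** 2
                  + (n2 - 1) * stdev(control) ** 2) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / math.sqrt(pooled_var)


group_a, group_b = assign_groups([f"student{i}" for i in range(6)])
print(len(group_a), len(group_b))  # 3 3
# Invented quiz scores collected after the unit, one per student in each group.
scores_a = [74, 78, 82]  # frequent pause-and-reviews
scores_b = [70, 76, 76]  # default setting
print(round(cohens_d(scores_a, scores_b), 2))  # 1.07
```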

At first glance, these reconceptualisations of PL™ appear to offer a leg-up over our mass education system, whose personalisation and autonomy are limited to choices of subject or project. Although data and technology could greatly reduce existing inefficiencies, content remains a bottleneck for a truly transformative education system. Instructional material matters: previous studies show that using a textbook purported to be in the ‘top quartile’ could boost student achievement by 3.6 percentile points (Kane, 2016). Whether learning paths are chosen by educators or algorithms, personalisation is only possible if there is a diverse body of high-quality content to support it. An advantage of a centralised digital PL™ system is its infrastructural support for numerous stakeholders to contribute different kinds of content to the learning platform. Content can be uploaded in real time and is instantly shareable and updatable, reminiscent of YouTube and Amazon, greatly reducing friction. This contrasts sharply with the content design and sharing systems currently in place. Although teachers occasionally play videos by online educators such as 3Blue1Brown in class, most of the curriculum is taught using textbooks. This is problematic because textbook turnover is very slow, with most US states having adoption and revision cycles of 7-10 years (Polikoff, 2018). Standard textbooks leave little room for personalisation and diversification, and content presentation is limited by the constraints of the medium. Thus, PL™ also appears to improve on the existing system in content diversity and flexibility. However, the quality of content (both design and substance) is also very important, raising a set of new questions: Who makes the content? Should it be open to all, or only to those with specific qualifications? If it is open to all, how will content moderation be implemented?

The current education model involves interactions between students, teachers, a static curriculum and the occasional parental input. Due to the complexity of the PL™ system, this model is no longer a feasible option for a humans-in-the-loop variation of PL™; a new model of stakeholder interaction in education needs to be fashioned.

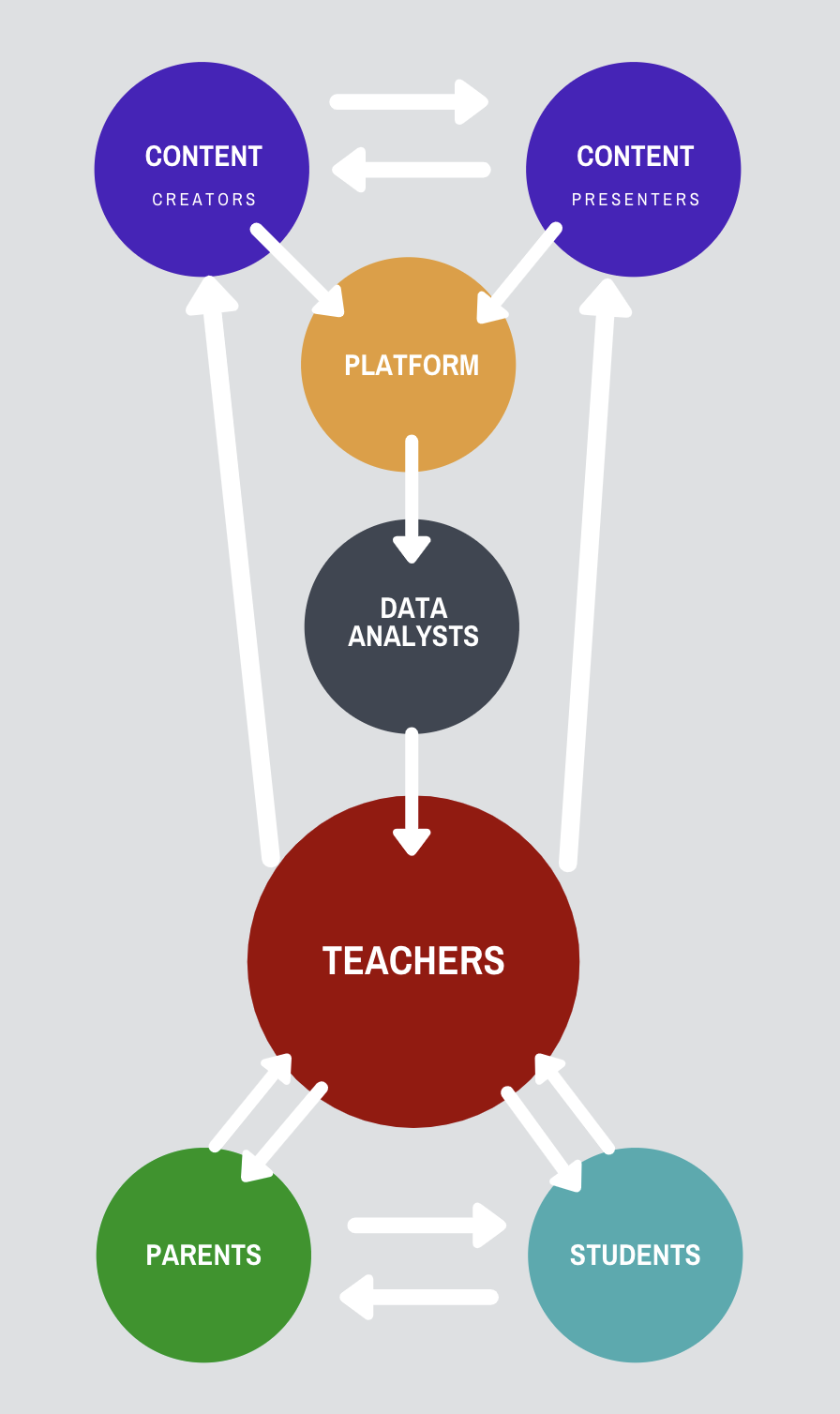

As Figure 1 shows, this could include content creators and data analysts in addition to teachers and students. Content creators prepare all kinds of distributable educational content, from written text to animations to games. They can be further divided into content designers, who are in charge of substantive content, and content presenters, who are in charge of its presentation, though one content creator could occupy both roles. Data analysts are responsible for interpreting the data generated and collected by the PL™ platform. Their role is to visualise and present data in a way that is quickly digestible and easily interpretable for teachers. I distinguish this role from that of teachers for two reasons. Firstly, surveys in several countries show that teachers are already overworked, averaging around 50-60 hours of work a week (Hefferman et al, 2019; U.S. Department of Education, 2018; Banning-Lover, 2016); adding data analysis tasks to their workload could hence decrease their productivity and effectiveness. Secondly, analysing and presenting data on the one hand, and guiding and shaping students’ educational paths on the other, involve distinct skill sets.

Figure 1 illustrates how teachers, students and parents remain involved in the humans-in-the-loop process. However, the role of teachers has changed. Rather than doing everything from creating lesson plans to marking scripts, teachers in this model act primarily as guides to students. This involves integrating information and feeding it back to all components of the system, such as telling content creators what type of content they want, and combining insights from data visualisations with their own expertise to help guide students.

Despite the added advantages noted above, some of the previously discussed limitations of PL™ carry over to the humans-in-the-loop variation. For example, existing technologies that rely on data collection and data-driven systems are primarily screen-based, promoting isolation and hindering socialisation. Teachers could remedy this by scheduling off-screen learning time, but an inherent trade-off remains between taking advantage of data-driven systems and allowing for socialisation. An ideal system would combine the two as complementary rather than mutually exclusive benefits. Another remaining limitation is the focus on quantifiable skills. Although a PL™ system with humans in the loop is not perfect, it appears to be an improvement over both the current system and PL™ on its own. It can hence be taken as a starting point and improved through iterations of experimentation, implementation and observation.

The opportunities that data and cutting-edge technology present for K-12 education are not limited to PL™. There exist vastly different approaches that use alternative learning paradigms and employ data differently. Some creative suggestions I propose include the following:

1) Imagine students in groups of 4-5, all existing in the same Virtual Reality environment. They are given a collaborative task to complete, such as building a car or a cell. The students have varying abilities and different interests, ranging from design to engineering. Streams of data are collected for each individual based on how they interact with objects and teammates in the environment. This data is used to update each student’s ‘learning database’, which represents the mental models they currently hold. As this structured data represents their understanding of a phenomenon, the model itself can be ‘wrong’. It is, however, subject to continual updating and can be reviewed by the students, teachers, parents and an in-house algorithm to identify relevant knowledge gaps and next steps. Data on meta-skills such as different aspects of socialisation (e.g. active listening) can also be logged. This scenario roughly fits the experiential learning paradigm, which focuses on projects and problems. It can be supplemented with learning strategies from other paradigms, using tools such as Anki for basic knowledge acquisition and integrating game design principles to boost motivation (Dichev & Dicheva, 2017).

2) Master-apprenticeship relationships were a common method of learning in the past, for instance in Classical Greece and the age of the Scholastics, and still exist in areas such as the culinary world. This relationship is valuable because it allows students to learn the implicit knowledge of a tradition through close study (Burja, 2019). New technologies could bring mentor-apprenticeship-based learning back. One example is a matching application that pairs mentors and apprentices based on their profiles and data. Another suggestion is to reconstruct experts virtually from the data we have on them (we could also request to collect certain kinds of data) and then deploy them as mentors. This bypasses the limits on the experts’ time and resources, but raises issues relating to ethics and socialisation. Again, this would be integrated with other learning methods.
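For the matching application, one well-established option (a swapped-in technique, not something this essay specifies) is deferred acceptance, the Gale-Shapley algorithm, which produces a stable matching: no mentor and apprentice would both prefer each other over their assigned partners. The Python sketch below assumes, hypothetically, that profiles are sets of interests, that each mentor takes one apprentice, and that both sides rank each other by interest overlap (Jaccard similarity), with ties broken by input order.

```python
def jaccard(a, b):
    """Interest overlap between two profiles: 0 = nothing shared, 1 = identical."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def stable_match(apprentices, mentors):
    """Apprentice-proposing deferred acceptance (Gale-Shapley), one apprentice per mentor.

    Both arguments map a name to a set of interests; each side ranks the other
    by interest overlap. Returns {apprentice: mentor}; surplus apprentices stay unmatched.
    """
    prefs = {a: sorted(mentors, key=lambda m: jaccard(apprentices[a], mentors[m]),
                       reverse=True)
             for a in apprentices}
    next_pick = {a: 0 for a in apprentices}  # index of the next mentor to propose to
    engaged = {}                             # mentor -> apprentice currently held
    free = list(apprentices)
    while free:
        a = free.pop()
        if next_pick[a] == len(prefs[a]):
            continue  # has proposed to every mentor; remains unmatched
        m = prefs[a][next_pick[a]]
        next_pick[a] += 1
        held = engaged.get(m)
        if held is None:
            engaged[m] = a
        elif jaccard(mentors[m], apprentices[a]) > jaccard(mentors[m], apprentices[held]):
            engaged[m] = a      # mentor trades up; the displaced apprentice is free again
            free.append(held)
        else:
            free.append(a)      # rejected; will propose to the next mentor
    return {a: m for m, a in engaged.items()}


apprentices = {"Mia": {"baking"}, "Raj": {"baking", "painting"}}
mentors = {"pastry chef": {"baking"}, "painter": {"painting"}}
print(stable_match(apprentices, mentors))  # {'Mia': 'pastry chef', 'Raj': 'painter'}
```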

Although these ideas are currently years away from leaving the realm of science fiction, it is important to foster a discourse on what we want education to look like and how data and technology can be leveraged to achieve this vision. Everyone, from teachers and students to philosophers and designers, should be part of the conversation, as each brings a different perspective to the table.

Conclusion

In conclusion, although PL™ has benefits for K-12 education, notably in efficiency and flexibility, it struggles in other areas such as socialisation and meta-skill development. I have argued that adding humans-in-the-loop is a viable way to mitigate negative effects on areas such as autonomy, but that it may require refashioning the ‘education stakeholder interaction’ model. Moreover, our visions for the future of education need not be limited to our current conception of PL™, in terms of either the tools used or the built-in learning paradigm; I propose two futuristic alternatives to jumpstart this conversation. Indeed, rather than merely reducing the inefficiencies of our existing learning system and reinforcing it, it is important to work from first principles, asking questions such as “What do we want from education?” and “How can we leverage technology to achieve said goals?”. The path forward is uncertain and, given our historical track record, it is unlikely that we will reach an ‘optimal end-state’ (if one exists) anytime soon. It is thus imperative that we retain a sense of epistemic humility and unbounded curiosity, and embrace the iterative, experimental and creative process that lies ahead.

References

Ames, C., & Archer, J. (1988). Achievement goals in the classroom: Students’ learning strategies and motivation processes. Journal of Educational Psychology, 80(3), 260-267.

Atkins, D., Bennett, J., Brown, J., Chopra, A., Dede, C., Fishman, B., Gomez, L., et al. (2010). National Education Technology Plan (ED-04-CO-0040). Alexandria, VA: SRI International.

Banning-Lover, R. (2016, March 22). 60-hour weeks and unrealistic targets: teachers’ working lives uncovered. The Guardian. Retrieved from https://www.theguardian.com/teacher-network/datablog/2016/mar/22/60-hour-weeks-and-unrealistic-targets-teachers-working-lives-uncovered.

Bienkowski, M., Feng, M., & Means, B. (2012). Enhancing teaching and learning through educational data mining and learning analytics: An issue brief. US Department of Education, Office of Educational Technology, 1, 1-57.

Bill & Melinda Gates Foundation. (2010). Next Generation Learning. https://docs.gatesfoundation.org/Documents/nextgenlearning.pdf

Bill & Melinda Gates Foundation. (2015, June). Teachers Know Best: Making Data Work for Teachers and Students. Seattle: Bill & Melinda Gates Foundation. http://collegeready.gatesfoundation.org/wp-content/uploads/2015/06/TeachersKnowBest-MakingDataWork.compressed.pdf

Blikstein, P. (2011). Using Learning Analytics to Assess Students’ Behavior in Open-Ended Programming Tasks. In Proceedings of the 1st International Conference on Learning Analytics and Knowledge (LAK ’11), Banff, Alberta. doi:10.1145/2090116.2090132

Bloom, B. S. (1984). The 2 sigma problem: The search for methods of group instruction as effective as one-to-one tutoring. Educational Researcher, 13(6), 4-16.

Burja, S. (2019, April 23). Why We Still Need Masters & Apprentices. https://www.youtube.com/watch?v=ribdRDO75Rk

Butler, R., & Nisan, M. (1986). Effects of no feedback, task-related comments, and grades on intrinsic motivation and performance. Journal of Educational Psychology, 78, 210.

Butler, D. L., & Winne, P. H. (1995). Feedback and self-regulated learning: A theoretical synthesis. Review of Educational Research, 65(3), 245-281.

Cator, K., & Adams, B. (2013). Expanding Evidence Approaches for Learning in a Digital World (ED-04-CO-0040). U.S. Department of Education, Office of Educational Technology; SRI International. http://www.ed.gov/technology

Colangelo, N., & Assouline, S. (2009). Acceleration: Meeting the academic and social needs of students. In International handbook on giftedness (pp. 1085-1098). Springer, Dordrecht.

Corden, R.E. (2001). Group discussion and the importance of a shared perspective: Learning from collaborative research. Qualitative Research, 1(3), 347-367.

Covington, P., Adams, J., & Sargin, E. (2016, September). Deep neural networks for YouTube recommendations. In Proceedings of the 10th ACM Conference on Recommender Systems (pp. 191-198).

Crooks, T. J. (1988). The impact of classroom evaluation practices on students. Review of Educational Research, 58, 438-481.

Deci, E. L., & Ryan, R. M. (2000). The ‘what’ and ‘why’ of goal pursuits: Human needs and the self-determination of behavior. Psychological Inquiry, 11, 227-268.

Dewey, J. (1988 [1927]). The public and its problems. In J. A. Boydston (Ed.), The Later Works of John Dewey, 1925-1953 (Vol. 2). Carbondale, IL: Southern Illinois University Press.

Dewey, J. (2001 [1916]). Democracy and Education. Hazleton, PA: Penn State University Press.

Dewey, J. (2010 [1899]). The School and Society and The Child and the Curriculum. Digireads.

Dichev, C., & Dicheva, D. (2017). Gamifying education: what is known, what is believed and what remains uncertain: a critical review. International journal of educational technology in higher education, 14(1), 1-36.

Dishon, G. (2017). New data, old tensions: Big data, personalized learning, and the challenges of progressive education. Theory and Research in Education, 15(3), 272-289.

Donovan, J. J., & Radosevich, D. J. (1999). A meta-analytic review of the distribution of practice effect: Now you see it, now you don’t. Journal of Applied Psychology, 84(5), 795–805. https://doi.org/10.1037/0021-9010.84.5.795

Dweck, C. S., & Master, A. (2009). Self-theories and motivation. Handbook of motivation at school, 123.

Ebbinghaus, H. (1964). Memory: A contribution to experimental psychology (H. A. Ruger & C. E. Bussenius, Trans.). New York, NY: Teachers College. (Original work published as Das Gedächtnis, 1885.)

Goldman, S. R., & Pellegrino, J. W. (2015). Research on learning and instruction: Implications for curriculum, instruction, and assessment. Policy Insights from the Behavioral and Brain Sciences, 2(1), 33-41.

Gopnik, A. (2012). Scientific thinking in young children: Theoretical advances, empirical research, and policy implications. Science, 337(6102), 1623-1627.

Hargreaves, D. H. (2006). A new shape for schooling? London: Specialist Schools and Academies Trust.

Heffernan, A., Longmuir, F., Bright, D., & Kim, M. (2019). Perceptions of teachers and teaching in Australia. Melbourne: Monash University.

Herold, B. (2016, October 19). Personalized Learning: What Does the Research Say? Education Week. https://www.edweek.org/technology/personalized-learning-what-does-the-research-say/2016/10

Kane, T. J. (2016, March 3). Never judge a book by its cover: Use student achievement instead. Brookings Institution. Retrieved from https://www.brookings.edu/research/never-judge-a-book-by-its-cover-use-student-achievement-instead/

Lim, J., Reiser, R. A., & Olina, Z. (2009). The effects of part-task and whole-task instructional approaches on acquisition and transfer of a complex cognitive skill. Educational Technology Research and Development, 57(1), 61–77.

Lynch, J. (2018, July 16). Why Personalization in Education Misses the Point. Medium. https://medium.com/age-of-awareness/why-personalization-in-education-misses-the-point-aa1ac84048ed

Macfadyen, L. P., & Dawson, S. (2010). Mining LMS data to develop an ‘‘early warning system” for educators: A proof of concept. Computers & Education, 54(2), 588-599. doi: 10.1016/j.compedu.2009.09.008

Marzano, R. J., Pickering, D. J., & Pollock, J. E. (2001). Classroom Instruction that Works: Research-based Strategies for Increasing Student Achievement. Alexandria, VA: Association for Supervision and Curriculum Development.

McRae, P. (2013, April 14). Rebirth of the Teaching Machine through the Seduction of Data Analytics: This Time It’s Personal. Philip McRae, Ph.D. http://philmcrae.com/2/post/2013/04/rebirth-of-the-teaching-maching-through-the-seduction-of-data-analytics-this-time-its-personal1.html

Pane, J. F., Griffin, B. A., McCaffrey, D. F., & Karam, R. (2014). Effectiveness of cognitive tutor algebra I at scale. Educational Evaluation and Policy Analysis, 36(2), 127-144.

Pane, J. F., Steiner, E. D., Baird, M. D., et al. (2015). Continued Progress: Promising Evidence on Personalized Learning. Santa Monica, CA: RAND Corporation.

Pane, J. F., Steiner, E. D., Baird, M. D., Hamilton, L. S., & Pane, J. D. (2017). How does personalized learning affect student achievement? RAND Corporation.

Personalization. (2015). In Wikipedia. https://en.wikipedia.org/wiki/Personalization

Plummer, L. (2017, August 22). This is how Netflix’s top-secret recommendation system works. Wired UK. https://www.wired.co.uk/article/how-do-netflixs-algorithms-work-machine-learning-helps-to-predict-what-viewers-will-like

Polikoff, M. (2018). The challenges of curriculum materials as a reform lever. Brookings Institution. Retrieved from https://www.brookings.edu/blog/brown-center-chalkboard/2016/03/15/textbooks-are-important-but-states-and-districts-arent-systematically-tracking-them/.

Pulfrey, C., Buchs, C., & Butera, F. (2011). Why grades engender performance-avoidance goals: The mediating role of autonomous motivation. Journal of Educational Psychology, 103, 683.

Reznitskaya, A., Anderson, R. C., & Kuo, L. J. (2007). Teaching and learning argumentation. Elementary School Journal, 107, 449-472.

Roberts-Mahoney, H., Means, A. J., & Garrison, M. J. (2016). Netflixing human capital development: Personalized learning technology and the corporatization of K-12 education. Journal of Education Policy, 31(4), 405-420.

Schinske, J., & Tanner, K. (2014). Teaching more by grading less (or differently). CBE—Life Sciences Education, 13(2), 159-166.

Schutz, A. (2001). John Dewey’s conundrum: Can democratic schools empower? Teachers College Record, 103(2), 267-302.

Shechtman, N., DeBarger, A.H., Dornsife, C., Rosier, S., & Yarnall, L. (2013). Promoting grit, tenacity, and perseverance: Critical factors for success in the 21st century. U.S. Department of Education Office of Educational Technology.

Sykes, A., Decker, C., Verbrugge, M., & Ryan, K. (2014). Personalized learning in progress: Case studies of four Race to the Top district grantees’ early implementation. Larium Evaluation Group, on behalf of United States Department of Education. https://rttd.grads360.org/services/PDCService.svc/GetPDCDocumentFile?fileId=7452

“TALIS 2018 U.S. Highlights Web Report (NCES 2019-132 and NCES 2020-069)” (2018). U.S. Department of Education. Institute of Education Sciences, National Center for Education Statistics. Available at https://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=2019132.

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Cambridge, MA: Harvard University Press.

Watters, A. (2018, April 26). Teaching Machines, or How the Automation of Education Became ‘Personalized Learning’. Hack Education. http://hackeducation.com/2018/04/26/cuny-gc

Weber, K., Maher, C., Powell, A., & Lee, H. S. (2008). Learning opportunities from group discussions: Warrants become the objects of debate. Educational Studies in Mathematics, 68(3), 247-261.

What Works Clearinghouse. (2009). WWC Intervention Report: Cognitive Tutor®. Washington, DC: U.S. Department of Education, Institute of Education Sciences.

Wiese, C. (Host). (2020, May). Tyler Shaddix of GoGuardian: The Future of Education, Digital Learning, and Data (No. 1) [Audio podcast episode]. In Build The Future. https://www.buildthefuturepodcast.com/episode/tyler-shaddix-goguardian

Zuckerberg, M., & Chan, P. (2015, December 1). A letter to our daughter. Facebook. https://www.facebook.com/notes/mark-zuckerberg/a-letter-to-our-daughter/10153375081581634/